Use the Bogus library to generate and insert 1 million dummy product records into a SQL Server database

We need to insert 1 million dummy product records into a SQL Server database so they can be used for development or performance testing.

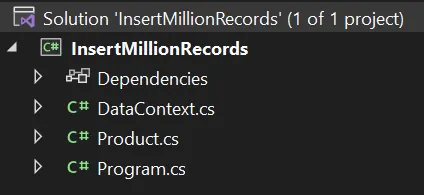

The Project

The project is a console application using .NET 6.0 as a framework.

The project name is InsertMillionRecords.

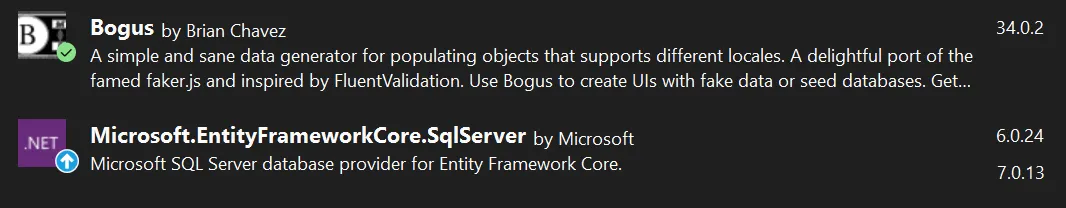

We will use the Bogus package to generate random product data.

We use Entity Framework Core as the data access layer.

Product Model

To model the Products table in the database, we need to create the Product class:

public class Product
{
    public int Id { get; set; }
    public string Code { get; set; }
    public string Description { get; set; }
    public string Category { get; set; }
    public decimal Price { get; set; }
}

Entity Framework Data Context

Next, we create the Entity Framework data context class:

using Microsoft.EntityFrameworkCore;

namespace InsertMillionRecords;

public class DataContext : DbContext
{
    public DataContext(DbContextOptions<DataContext> options) : base(options)
    {
    }

    public DbSet<Product> Products { get; set; }
}

The Program.cs File

Initialize Data Context

First, we need to initialize the data context:

var connectionString = "Data Source=localhost; Initial Catalog=Product; Integrated Security=True";
var contextOptionsBuilder = new DbContextOptionsBuilder<DataContext>();
contextOptionsBuilder.UseSqlServer(connectionString);
var context = new DataContext(contextOptionsBuilder.Options);

To keep things simple, the connection string is hardcoded.
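If a hardcoded value does not suit your setup, one option is to read the connection string from an environment variable and fall back to the value used in this post. This is only a minimal sketch: the variable name PRODUCT_DB_CONNECTION is a hypothetical choice, not something the project defines.

```csharp
using System;

// Hypothetical environment variable name (an assumption, not part of the original project).
const string variableName = "PRODUCT_DB_CONNECTION";

// The hardcoded connection string from this post acts as the fallback.
const string fallback = "Data Source=localhost; Initial Catalog=Product; Integrated Security=True";

// GetEnvironmentVariable returns null when the variable is not set.
var connectionString = Environment.GetEnvironmentVariable(variableName);
var usingFallback = string.IsNullOrWhiteSpace(connectionString);
if (usingFallback)
{
    connectionString = fallback;
}

// Report only where the value came from, so the connection string itself is never printed.
Console.WriteLine(usingFallback
    ? "Using the hardcoded connection string."
    : $"Using the connection string from {variableName}.");
```

The resulting connectionString can then be passed to UseSqlServer() exactly as before.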

Create Database

Each time the application runs, we delete and recreate the database so that every run starts from a clean state.

await context.Database.EnsureDeletedAsync();
await context.Database.EnsureCreatedAsync();

Setup Bogus Faker Class

First, we initialize the Faker<Product> object.

Next, we use the RuleFor() method to set up each property of the Product class.

The code is self-explanatory:

var faker = new Faker<Product>();
faker.RuleFor(p => p.Code, f => f.Commerce.Ean13());
faker.RuleFor(p => p.Description, f => f.Commerce.ProductName());
faker.RuleFor(p => p.Category, f => f.Commerce.Categories(1)[0]);
faker.RuleFor(p => p.Price, f => f.Random.Decimal(1, 1000));

Generate 1 Million Dummy Product Data

var products = faker.Generate(1_000_000);

The products variable now contains 1 million product records!

Create 10 Batches of Insertion

Inserting all 1 million records in a single operation may cause a timeout.

Therefore, we will split the process into 10 batches of 100,000 records each.

var batches = products
    .Select((p, i) => (Product: p, Index: i))
    .GroupBy(x => x.Index / 100_000)
    .Select(g => g.Select(x => x.Product).ToList())
    .ToList();
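As an aside, the same split can probably be written more compactly with Enumerable.Chunk, which is available since .NET 6. The sketch below uses plain integers as a stand-in for the generated products so that it runs without Bogus or a database, and it compares the result with the index-based grouping shown above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Stand-in for the generated product list: integers keep the sketch self-contained.
List<int> items = Enumerable.Range(0, 1_000_000).ToList();

// Index-based grouping, mirroring the approach used in this post.
List<List<int>> grouped = items
    .Select((item, index) => (Item: item, Index: index))
    .GroupBy(x => x.Index / 100_000)
    .Select(g => g.Select(x => x.Item).ToList())
    .ToList();

// Enumerable.Chunk splits a sequence into arrays of at most the given size.
List<int[]> chunked = items.Chunk(100_000).ToList();

Console.WriteLine($"GroupBy batches: {grouped.Count}, Chunk batches: {chunked.Count}");
Console.WriteLine($"First batch size: {chunked[0].Length}, last batch size: {chunked[^1].Length}");
```

Both approaches produce 10 batches of 100,000 items here. Applied to the list in this post, the call would be products.Chunk(100_000), with each batch being an array rather than a list.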

Insert Each Batch into the Database

var count = 0;
foreach (var batch in batches)
{
    count++;
    Console.WriteLine($"Inserting batch {count} of {batches.Count}...");
    await context.Products.AddRangeAsync(batch);
    await context.SaveChangesAsync();
}

The complete code of the Program.cs file:

using Bogus;
using InsertMillionRecords;
using Microsoft.EntityFrameworkCore;
using System.Diagnostics;

// initialize data context
var connectionString = "Data Source=localhost; Initial Catalog=Product; Integrated Security=True";
var contextOptionsBuilder = new DbContextOptionsBuilder<DataContext>();
contextOptionsBuilder.UseSqlServer(connectionString);
var context = new DataContext(contextOptionsBuilder.Options);

// create database
await context.Database.EnsureDeletedAsync();
await context.Database.EnsureCreatedAsync();

// setup bogus faker
var faker = new Faker<Product>();
faker.RuleFor(p => p.Code, f => f.Commerce.Ean13());
faker.RuleFor(p => p.Description, f => f.Commerce.ProductName());
faker.RuleFor(p => p.Category, f => f.Commerce.Categories(1)[0]);
faker.RuleFor(p => p.Price, f => f.Random.Decimal(1, 1000));

// generate 1 million products
var products = faker.Generate(1_000_000);

// split the products into 10 batches of 100,000 each
var batches = products
    .Select((p, i) => (Product: p, Index: i))
    .GroupBy(x => x.Index / 100_000)
    .Select(g => g.Select(x => x.Product).ToList())
    .ToList();

// insert batches
var stopwatch = new Stopwatch();
stopwatch.Start();

var count = 0;
foreach (var batch in batches)
{
    count++;
    Console.WriteLine($"Inserting batch {count} of {batches.Count}...");
    await context.Products.AddRangeAsync(batch);
    await context.SaveChangesAsync();
}

stopwatch.Stop();
Console.WriteLine($"Elapsed time: {stopwatch.Elapsed}");

Console.WriteLine("Press any key to exit...");
Console.ReadKey(); // wait for a key press, as the message above promises

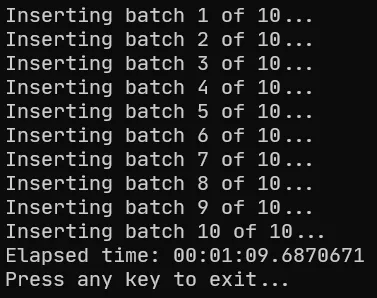

Run the Application

Now, let’s run the application. We can use Release mode to speed up the process.

It took 1 minute and 9 seconds on my machine:

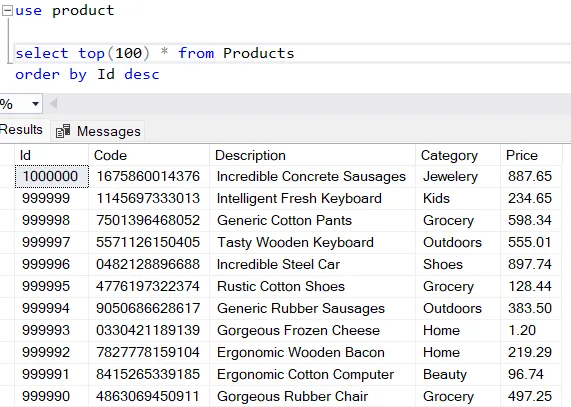

And now we have 1 million records in the Products table.

I am planning to use this dummy data to test the full-text search feature in SQL Server.

The source code of this post can be found here: https://github.com/juldhais/InsertMillionRecords

Thanks for reading 👍