This is a behavioral pattern where the main goal is to split a complex job into multiple steps, each with a specific functionality. It’s really good for several types of architectures. For a system composed of various microservices with numerous components, this is a way to keep things easier to read and maintain.

This pattern has several key concepts similar to the chain of responsibility one.

Main concept

The core basis is to create a chain of multiple steps where each step works with the result from the previous one.

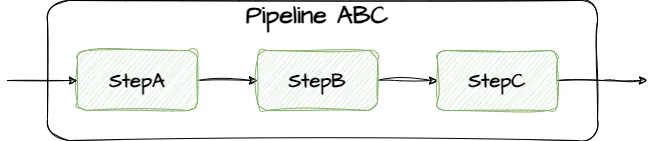

Pipeline pattern with a chain of steps

In the above image, we can see a simple example of a pipeline definition. StepA is the first that receives the input and makes something. After that, the result is passed on to Step B. This one applies other specific logic and returns the result to the next step. The last one in the chain is the StepC, and this one is responsible for publishing the results of the entire pipeline.

When should I use it?

It’s a good design pattern to simplify complex business processes. Imagine you have a problem that requires multiple steps to achieve a final result. Once you have a good separation of responsibilities you are in a good spot to define the concern for each step, and at the end for each pipeline.

Another nice point is the fact that you could align your documentation with your code since each step will have a definition and that definition will have a direct relation with your step in code.

In a simple system, it could be too much to solve an easy problem. However, in a huge and complex system, this will simplify your life for sure in the short and long term.

Pipeline? DevOps? CI/CD?

Well, good point. The pipelines that we know from continuous integration and continuous delivery are aligned in some way with this design pattern. A DevOps pipeline is a series of steps that must be performed to deliver a new version of software. They are the same concept for different purposes.

Let’s talk about code

To implement this pattern we could create a generic approach to reuse this in other projects. You can check the entire solution here in my repository on GitHub.

We should have the following objects:

- Pipeline — a chain of steps.

- Step — a specific task.

- Data — the information that passes between steps.

Next, we can see the code logic for each one.

public interface IPipeline<T>

{

public string Name { get; set; }

public IReadOnlyCollection<IStep> Steps { get; }

void WithStep(IStep step);

Task<T> StartAsync(IData data);

}

IPipeline interface

A pipeline is composed of its name, a list of steps, and 2 main methods. One is for adding a step, and the other is to start the execution of the pipeline.

public interface IStep

{

Task<IData> ExecuteAsync(IData data);

}

IStep interface

Here, we can see the step that simply has a method to execute itself.

The data is represented by an empty interface named IData.

Pipeline implementation

In the next code section, we can check the pipeline implementation.

public class Pipeline<T> : IPipeline<T> where T : IData

{

private readonly List<IStep> _steps = new();

public string Name { get; set; }

public IReadOnlyCollection<IStep> Steps => _steps;

public Pipeline(string name)

{

Name = name;

}

public void WithStep(IStep step)

{

_steps.Add(step);

}

public async Task<T> StartAsync(IData input)

{

IData result = input;

foreach (var step in Steps)

{

try

{

result = await step.ExecuteAsync(result);

}

catch (Exception)

{

throw;

}

}

return (T)result;

}

}

Pipeline implementation

The method WithStep adds an IStep to the list of steps. StartAsync is responsible for executing these steps. After each step's execution, its result is passed to the next step. The steps are executed in the same order they were added to the pipeline.

Usage sample

The following code snippet shows how to configure and execute a pipeline.

var pipeline = new Pipeline<OutputData>("MyFirstPipeline");

pipeline.WithStep(new ToUpperStep());

pipeline.WithStep(new TextLengthStep());

var input = new InputData

{

Text = "Hello World!"

};

var output = await pipeline.StartAsync(input);

Console.WriteLine($"Starting pipeline {pipeline.Name}...");

Console.WriteLine($"Input text: '{input.Text}'");

Console.WriteLine($"Length of text is: {output.Result}");

Example of usage

First of all, a new pipeline instance is created. Next, we can add steps to the pipeline. The last thing is the creation of input data to send to the pipeline. At this moment, we are ready to start the execution of the pipeline. The result is stored in a variable where the type is the same as the one defined during pipeline creation (e.g., OutputData in this sample).

Why use generics for Pipeline?

The idea is to allow a pipeline definition to have a specific expected result type. As we can see, in the previous sample of usage, the pipeline was defined with OutputData as a result type. So because of that the result of StartAsync is an object of that type. This allows us to have a custom output type for each pipeline definition which creates a good extensibility.

Ideas for enhancements

Given that this was a basic example of this pattern implementation, here are some valuable improvements that could be made.

- Generic steps — The first thing in my mind is to convert steps to be also generic where they could use a specific interface. The input model just needs to also implement that interface. With this, my step just depends on its own

IData definition interface instead of being related to the type of the pipeline.

- Multiple pipelines — If we want to have more than one pipeline definition in the same deployment unit, we should create a discover class to get the instance of the pipeline.

- Context for pipeline — For cases where the output from a step is not used in the next one, it will be good to have a kind of context to be maintained along pipeline execution and to allow all steps to access it and get some value. This could be implemented with a dictionary of string as a key and object as a value.

We need to keep in mind that the sample provided is a very simple one just to get the idea behind this pattern. As with any other pattern, we could extend the features according to our requirements. This reminds me of the YAGNI mantra. Let’s try to not kill an ant with a bazooka!

Conclusion

In my design pattern article series, I normally say that a pattern is good for some scenarios, never for all of them. Does one pattern fit all? No, such a thing doesn’t exist. We have effective patterns for several problems, and it’s beneficial to be familiar with them. However, it’s also crucial to understand them well enough to decide which one to use for a specific problem.

My experience with using this pattern has been very positive. I have been working with this type of design a long time ago and this makes all sense in the context of a complex system with lots of pieces to get the final goal.

This pattern requires an initial effort to create the core algorithm, but once that is done, we simply need to create steps and integrate them into a pipeline definition.