In today’s story, we are going to talk about what Tasks are and give a brief introduction to the Task Parallel Library (TPL). We will also give a small preview of Async & the Task-Based Asynchronous Pattern.

Tasks

To begin talking about what tasks actually are, let’s take a step back and consider answering one very basic question that often causes confusion amongst developers: What is the difference between threads and tasks?

Threads are the lowest-level constructs of multithreading. They are basic units of execution that are allocated processor time by the OS and contain a sequence of program instructions that can be managed independently by a thread scheduler, which is part of the OS. Working directly with threads can become challenging. For example, it can be quite a complicated process to return a value from a separate worker thread.

Tasks, on the other hand, are a higher-level .NET abstraction that represents a promise of separate work that will be completed in the future. For example, a Task<T> is a task that promises to return a value of type T when it completes. Tasks are compositional in nature: they can return values and can be chained, any number of times, by using task continuations. They can also use the thread pool, and they are a natural fit for I/O-bound operations.

Note: Just using a Task in .NET code does not mean there are separate new threads involved.

Generally, when using Task.Run() or similar constructs, a task runs on a separate thread (mostly a managed thread-pool one), managed by the .NET CLR. But that depends on the actual implementation of the task.
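To make the contrast concrete, here is a minimal sketch of getting a value back from a raw thread versus from a Task<T>. The Square helper is a hypothetical stand-in for real work, and the last part illustrates the note above: a task that is created already completed does not need a thread at all.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// With a raw thread, the result has to travel through shared state,
// and the caller must Join() before it is safe to read it.
int threadResult = 0;
var thread = new Thread(() => threadResult = Square(7));
thread.Start();
thread.Join();
Console.WriteLine($"Thread result: {threadResult}");

// With Task<T>, the return value travels with the task itself.
Task<int> task = Task.Run(() => Square(7));
int taskResult = await task;
Console.WriteLine($"Task result: {taskResult}");

// A task does not have to involve a thread: this one is already
// completed at the moment it is created.
Task<int> completed = Task.FromResult(49);
Console.WriteLine($"Completed on creation: {completed.IsCompleted}");

// Hypothetical helper standing in for real work.
static int Square(int x) => x * x;
```

Both approaches compute the same value; the difference is in how much plumbing the caller has to write to get it back safely.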

So, now a question arises. Should we use threads or tasks in our code?

A Thread, being a low-level construct, gives the maximum flexibility to control execution and manage attached resources. Work on a newly created thread begins as soon as the thread is started, but creating threads manually can cause serious issues as well. Creating, starting, and stopping threads takes time and consumes resources. Thread pool threads, on the other hand, are not terminated once their work is done; they are kept alive and re-used. If we keep creating a huge number of new threads manually and there are many more threads than CPU cores in our machine, the OS needs to do frequent context switches, which are expensive. Finally, managing and synchronizing threads correctly can be complex.

That’s why, for most purposes in modern .NET programming, it is recommended to use tasks instead. This, of course, does not necessarily mean “new” threads. For example, when typing new Thread(…).Start() in our code, this actually creates and starts a new thread. But typing Task.Run(…) simply queues the work on the managed ThreadPool. The ThreadPool manages the work and assigns it to a thread from the pool whenever there is one available.

Note: Task and the modern ThreadPool are designed to be multi-core aware. That means, if there are multiple CPU cores available in the system, tasks will try to utilize them in an efficient way.

There are basically three different options for starting a new Task in our code:

new Task(Action).Start()

The new Task(Action).Start() command creates a new Task and gives it the Action to run and then it starts it. Generally, we should avoid using this option, as it needs synchronization to avoid race conditions, where multiple threads try to start a task (i.e., try to call the .Start() method).

Task.Factory.StartNew(Action)

The Task.Factory.StartNew(Action) command starts the task and then returns a reference to that task. This option is considered safe and saves the synchronization cost, and generally we prefer using it over the first one.

Task.Run(Action)

The Task.Run() command queues the specified Action delegate to run on the ThreadPool. A thread from the ThreadPool then picks up the work and runs our code, as per schedule and availability. Once the Action is completed, the thread is released back to the ThreadPool. This differs if a Task is marked as LongRunning, which is an option of Task.Factory.StartNew() rather than of Task.Run(). For a long-running task, the default scheduler typically uses a dedicated new thread. A long-running operation (as a rule of thumb, 0.5 seconds or more) should be run as LongRunning so that it does not tie up thread pool threads, which can then keep running and rotating through smaller tasks efficiently.

So, which one of the above 3 options to use?

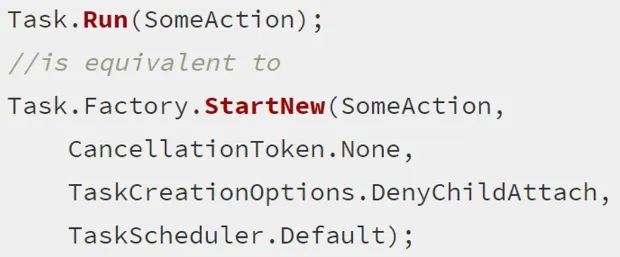

When we simply want to offload some activity to a background (thread-pool) thread, we should stick to Task.Run(), which is simply a short-hand for Task.Factory.StartNew(), with default parameters that work fine in most of the cases.

Task.Run is equivalent to Task.Factory.StartNew with some default parameters
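In text form, the equivalence can be sketched as follows. The default values shown are the ones the .NET documentation gives for Task.Run; the Compute helper is a hypothetical placeholder for real work.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Short form.
Task<int> viaRun = Task.Run(() => Compute());

// Long form, with the defaults that Task.Run applies spelled out.
Task<int> viaFactory = Task.Factory.StartNew(
    () => Compute(),
    CancellationToken.None,
    TaskCreationOptions.DenyChildAttach,
    TaskScheduler.Default);

int runResult = await viaRun;
int factoryResult = await viaFactory;
Console.WriteLine($"Task.Run: {runResult}, StartNew: {factoryResult}");

// Hypothetical helper standing in for real work.
static int Compute() => 21 * 2;
```

One practical difference worth keeping in mind: when called without an explicit scheduler, Task.Factory.StartNew uses TaskScheduler.Current, which is not necessarily the thread pool scheduler, whereas Task.Run always targets TaskScheduler.Default.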

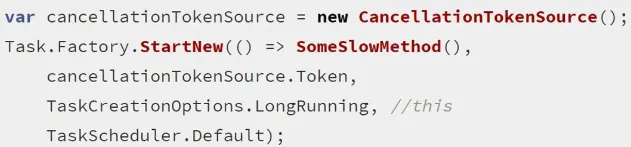

If you need specific customization like LongRunning process or a non-default TaskScheduler then go for Task.Factory.StartNew().

Declare the Task as LongRunning using Task.Factory.StartNew
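As a rough sketch of what such a declaration might look like, the snippet below starts one task with Task.Run and one with TaskCreationOptions.LongRunning, and lets each report whether it ran on a thread pool thread. On current .NET implementations the default scheduler gives a LongRunning task its own dedicated thread, but that is an implementation detail rather than a guarantee.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Default options: the delegate is queued to the thread pool.
Task<bool> shortTask = Task.Run(() => Thread.CurrentThread.IsThreadPoolThread);

// LongRunning hints to the scheduler that the work may hold its thread
// for a long time.
Task<bool> longTask = Task.Factory.StartNew(
    () => Thread.CurrentThread.IsThreadPoolThread,
    CancellationToken.None,
    TaskCreationOptions.LongRunning,
    TaskScheduler.Default);

bool shortOnPool = await shortTask;
bool longOnPool = await longTask;

Console.WriteLine($"Task.Run ran on a pool thread:    {shortOnPool}");
Console.WriteLine($"LongRunning ran on a pool thread: {longOnPool}");
```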

Note: The following code samples are just here to illustrate how Tasks can be used in the context of .NET and to show how things work under the hood in terms of the threads involved. In real-world scenarios, calling constructs like .Result or .Wait() on Tasks is not a recommended way to use them. We should instead utilize the asynchronous programming constructs, like the async and await keywords in C#, to avoid blocking the underlying thread while waiting for the Task’s result.

Let’s now see some examples of Task starting options in C#:

```csharp
Console.WriteLine($"Main starts execution on Thread {Environment.CurrentManagedThreadId}.");

// Option 1: new Task(Action).Start();
// Task that does not return a value.
var task = new Task(SimpleMethod);
task.Start();
Console.WriteLine($"Main continues execution on Thread {Environment.CurrentManagedThreadId} after starting {nameof(SimpleMethod)} task.");

// Task that returns a value.
var taskThatReturnsValue = new Task<string>(MethodThatReturnsValue);
taskThatReturnsValue.Start();
Console.WriteLine($"Main continues execution on Thread {Environment.CurrentManagedThreadId} after starting {nameof(MethodThatReturnsValue)} task - Option 1.");

// Block the current thread until the Task is completed.
taskThatReturnsValue.Wait();

// Get the result from the Task operation.
// Blocking operation on current thread.
Console.WriteLine(taskThatReturnsValue.Result);

Console.ReadLine();

static void SimpleMethod()
{
    Console.WriteLine($"Hello from {nameof(SimpleMethod)} on Thread {Environment.CurrentManagedThreadId}.");
}

static string MethodThatReturnsValue()
{
    // This simulates a computationally intensive operation.
    Thread.Sleep(2000);
    return $"Hello from {nameof(MethodThatReturnsValue)} on Thread {Environment.CurrentManagedThreadId}.";
}
```

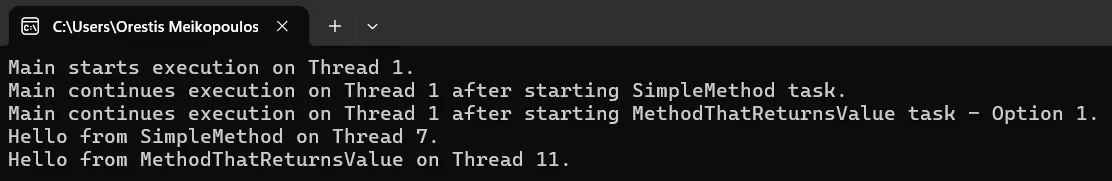

Task introduction code sample output

As we can see, the Main method creates and starts two new tasks by utilizing the new Task(Action).Start() command. Main itself, as we see in the output, continues its execution on the main thread (Thread 1). The two Console.WriteLine messages from the two methods we are calling through the newly created tasks come afterward, from different threads (Thread 7 and Thread 11). SimpleMethod just prints a message to the console immediately. MethodThatReturnsValue simulates a computationally intensive operation by means of the Thread.Sleep(2000) call. At some point in Main, we call .Wait() on the task that runs MethodThatReturnsValue. This blocks the current thread (Thread 1) until the Task is completed, and afterward we get the result from the Task by accessing its .Result property.

Let’s quickly see the other two options for creating a new task in C# code as well:

```csharp
Console.WriteLine($"Main starts execution on Thread {Environment.CurrentManagedThreadId}.");

// Option 2: Task.Factory.StartNew(Action);
var cancellationTokenSource = new CancellationTokenSource();
var task2 = Task.Factory.StartNew(() => MethodThatReturnsValue(),
    cancellationTokenSource.Token,
    TaskCreationOptions.LongRunning,
    TaskScheduler.Default);

// Execution can continue from here on the original thread.
Console.WriteLine($"Main continues execution on Thread {Environment.CurrentManagedThreadId} after starting {nameof(MethodThatReturnsValue)} task - Option 2.");

// Get the result from the Task operation.
// Blocking operation on current thread.
Console.WriteLine(task2.Result);

// Option 3: Task.Run(Action);
// Will run on separate thread.
var task3 = Task.Run(() => MethodThatReturnsValue());

// Execution can continue from here on original thread.
Console.WriteLine($"Main continues execution on Thread {Environment.CurrentManagedThreadId} after starting {nameof(MethodThatReturnsValue)} task - Option 3.");

// Get the result from the Task operation.
// Blocking operation on current thread.
Console.WriteLine(task3.Result);

Console.ReadLine();

static string MethodThatReturnsValue()
{
    // This simulates a computationally intensive operation.
    Thread.Sleep(2000);
    return $"Hello from {nameof(MethodThatReturnsValue)} on Thread {Environment.CurrentManagedThreadId}.";
}
```

In the above examples, we simulated a computationally intensive operation (the MethodThatReturnsValue method) by calling Thread.Sleep(2000), which blocks the current thread for 2 seconds. The kind of work it stands in for is called a CPU-bound operation. Generally, there are two types of operations:

- CPU-bound (or computation-intensive) operations: These are in-process operations, which use resources of a local machine (e.g., CPU, memory)

- I/O-bound operations: These are out-of-process calls (e.g., database, file system, network call). I/O operations can take any amount of time because they wait for external input. While making an I/O bound call, we do not want to starve the resources of our local machine, or the web server where the application is running. Instead, we would like to have the ability to make the call, immediately release the resources and introduce a callback, which will be called when we get the results back from that call.

Earlier we saw an example of how Tasks can be used with CPU-bound operations. At the same time, Tasks can also be used when we want to work with I/O-bound operations. Let’s see an example of this:

```csharp
var task = Task.Run(() => GetPosts("https://jsonplaceholder.typicode.com/posts"));

// Here we explicitly do not block the main thread from executing SomethingElse()
SomethingElse();

try
{
    // When you type .Wait() or .Result on the task object
    // you say that you want to wait for the result to come before continuing.
    //task.Wait();
    Console.WriteLine(task.Result);
}
// Every time we try to access the .Result from a task it can throw an AggregateException
catch (AggregateException ex)
{
    Console.Error.WriteLine(ex.Message);
}

Console.ReadLine();

static void SomethingElse()
{
    Console.WriteLine("Some other dummy operation happening in main thread.");
}

static string GetPosts(string url)
{
    using var client = new HttpClient();
    return client.GetStringAsync(url).Result;
}
```

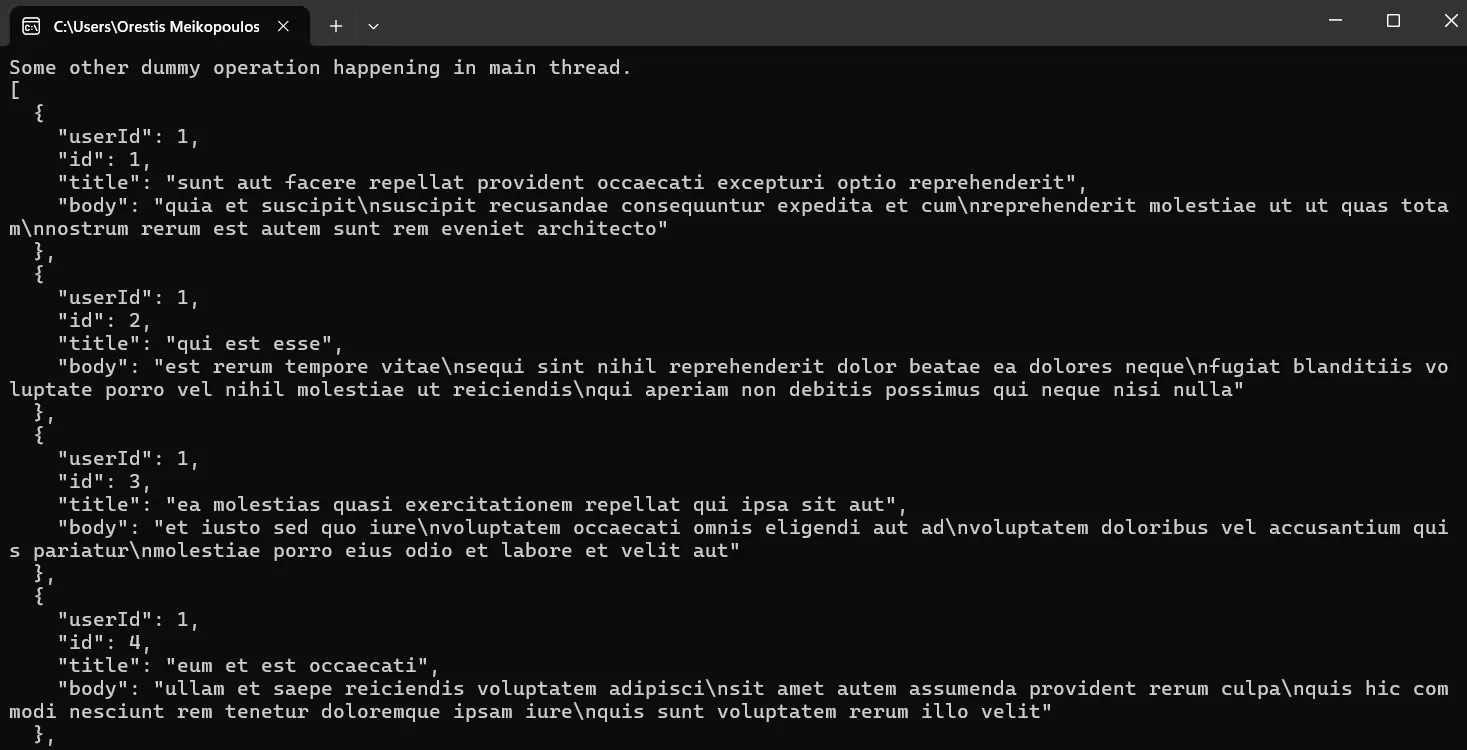

Here we are using Task.Run command to start a new Task that calls the GetPosts method. This method will make an API call and fetch some posts from the internet. After starting the task for getting the data from the /posts URL, we explicitly do not block the main thread from executing the SomethingElse method, which will be executed on the same thread. After this, we are calling task.Result on the Task object and we just print the contents from the API call to the console. By calling .Result, we are basically saying that we want to wait for the result to come before continuing our Main method execution. The output of the above code would be:

Task I/O code sample output
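For comparison, here is a sketch of how the same flow might look with async and await, so that no thread is blocked while the call is in flight. To keep the sketch runnable offline, GetPostsAsync is a hypothetical stand-in that simulates the network call with Task.Delay; in real code its body would await something like HttpClient.GetStringAsync(url) instead.

```csharp
using System;
using System.Threading.Tasks;

// Start the (simulated) I/O call. No thread is blocked while it is pending.
Task<string> postsTask = GetPostsAsync("https://jsonplaceholder.typicode.com/posts");

// The caller is free to do other work in the meantime.
SomethingElse();

try
{
    // await yields instead of blocking, and rethrows the original
    // exception rather than wrapping it in an AggregateException.
    string posts = await postsTask;
    Console.WriteLine(posts);
}
catch (Exception ex)
{
    Console.Error.WriteLine(ex.Message);
}

static void SomethingElse()
{
    Console.WriteLine("Some other dummy operation happening in the meantime.");
}

// Hypothetical stand-in for an HTTP call; Task.Delay simulates the latency.
static async Task<string> GetPostsAsync(string url)
{
    await Task.Delay(500);
    return $"[simulated response from {url}]";
}
```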

There are times when we want to execute some logic after a task is completed, like when having one task passing on execution to another task to continue. Traditionally, we use callbacks for such a situation. In the Task Parallel Library (TPL), the same functionality is provided by continuation tasks. A continuation is an asynchronous task that is invoked by another task, which is known as the antecedent, when the antecedent finishes, like we can see in the following example:

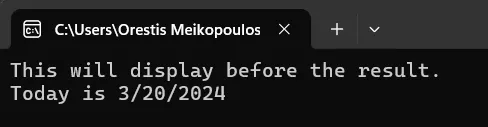

Task<string> antecedent = Task.Run(() => {

// Simulate a long running task

Task.Delay(2000).Wait();

return DateTime.Today.ToShortDateString();

});

// We want here to pass the antecedent data to the continuation

// The `t` argument is same as `task`

Task<string> continuation = antecedent.ContinueWith(t => {

return "Today is " + t.Result;

});

// Method execution will continue here normally

Console.WriteLine("This will display before the result.");

// Note: Using continuation.Result makes the process synchronous,

// execution WILL WAIT here on current thread for the task to complete

Console.WriteLine(continuation.Result);

Console.ReadLine();

Task chaining code sample output

Now, let’s turn our attention to how we can handle exceptions in Tasks. If a Task throws an exception, do we simply wrap it inside a try-catch block to handle it?

If the Task runs on another thread, as when we use the Task.Run method, our current thread does not get to know about the exception automatically. An exception thrown inside the method that the other thread executes is captured in the Task and does not affect the current thread directly. But, at the same time, the application silently fails to do what it was supposed to do.

The possible options for handling the above scenario are:

- Check for any fault on the Task object, using its .IsFaulted property.

- Use a continuation task and pass the TaskContinuationOptions.OnlyOnFaulted option to the ContinueWith method. In this case, if the method that the Task runs throws an exception, the Task terminates and the exception details are attached to the returned Task.

- Wait for the result of the Task to come back by using its .Wait() method or its .Result property, and surround this with a try/catch block.

Below you can see an example showing the three different options we mentioned:

```csharp
// With the IsFaulted property
void DoAsyncWorkV1()
{
    var task = Task.Run(() => SlowBuggyMethod());
    task.ContinueWith(t =>
    {
        if (t.IsFaulted)
        {
            Console.WriteLine($"Task failed with message: {t.Exception!.Message}.");
        }
        else
        {
            Console.WriteLine("Task completed successfully.");
        }
    });
}

// With TaskContinuationOptions
void DoAsyncWorkV2()
{
    var task = Task.Run(() => SlowBuggyMethod());
    task.ContinueWith(t =>
        Console.WriteLine("Exception: " + t.Exception!.Message),
        TaskContinuationOptions.OnlyOnFaulted);
}

// With .Wait() or .Result
void DoAsyncWorkV3()
{
    var task = Task.Run(() => SlowBuggyMethod());
    try
    {
        task.Wait(); // or task.Result
    }
    catch (AggregateException ex)
    {
        Console.WriteLine($"Task failed with message: {ex.InnerException!.Message}.");
    }
}

static void SlowBuggyMethod()
{
    Thread.Sleep(1000);
    throw new Exception("Something went wrong.");
}
```

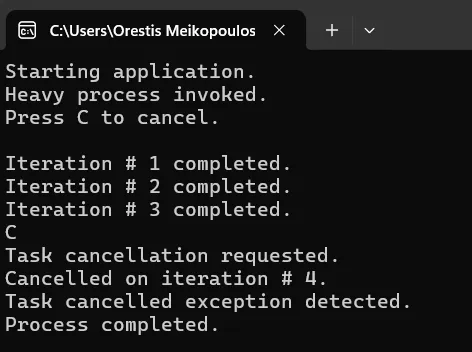
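When the task is awaited rather than waited on, the exception surfaces as its original type instead of being wrapped in an AggregateException. The following is a minimal sketch of that behavior; DoAsyncWorkV4 is a hypothetical name continuing the numbering above, and InvalidOperationException is used here (instead of the plain Exception thrown earlier) only to make the preserved type visible.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

string message = await DoAsyncWorkV4();
Console.WriteLine(message);

// With await: the catch block sees the original exception type.
static async Task<string> DoAsyncWorkV4()
{
    try
    {
        await Task.Run(() => SlowBuggyMethod());
        return "Task completed successfully.";
    }
    catch (InvalidOperationException ex)
    {
        return $"Task failed with message: {ex.Message}";
    }
}

static void SlowBuggyMethod()
{
    Thread.Sleep(1000);
    throw new InvalidOperationException("Something went wrong.");
}
```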

What about canceling tasks? To be able to cancel a task, we need to start it and pass a cancellation token into it. The CancellationToken is generated with the use of a CancellationTokenSource object. Cancellation can then be requested on the cancellation token source later.

Please note that just requesting a cancellation does not guarantee immediate cancellation of the task, or even any response at all. It is up to the underlying Task code to periodically check for a cancellation request and respond accordingly, as we can see in the following example:

```csharp
Console.WriteLine("Starting application.");

var source = new CancellationTokenSource();

// Assuming the wrapping class is CancellableTaskTest
var task = CancellableTaskTest.CreateCancellableTask(source.Token);

Console.WriteLine("Heavy process invoked.");
Console.WriteLine("Press C to cancel.");
Console.WriteLine("");

char ch = Console.ReadKey().KeyChar;
if (ch == 'c' || ch == 'C')
{
    source.Cancel();
    Console.WriteLine("\nTask cancellation requested.");
}

try
{
    task.Wait();
}
catch (AggregateException ex)
{
    if (ex.InnerExceptions.Any(e => e is TaskCanceledException))
    {
        Console.WriteLine("Task cancelled exception detected.");
    }
    else
    {
        throw;
    }
}
finally
{
    source.Dispose();
}

Console.WriteLine("Process completed.");
Console.ReadLine();

class CancellableTaskTest
{
    public static Task CreateCancellableTask(CancellationToken ct)
    {
        return Task.Factory.StartNew(() => CancellableWork(ct), ct);
    }

    private static void CancellableWork(CancellationToken cancellationToken)
    {
        if (cancellationToken.IsCancellationRequested)
        {
            Console.WriteLine("Cancelled work before start.");
            cancellationToken.ThrowIfCancellationRequested();
        }

        for (int i = 0; i < 10; i++)
        {
            Thread.Sleep(1000);
            if (cancellationToken.IsCancellationRequested)
            {
                Console.WriteLine($"Cancelled on iteration # {i + 1}.");
                // The following line alone is enough to check and throw
                cancellationToken.ThrowIfCancellationRequested();
            }
            Console.WriteLine($"Iteration # {i + 1} completed.");
        }
    }
}
```

Task cancellation code sample output

Task Parallel Library (TPL)

Let’s now briefly introduce the Task Parallel Library. TPL is a set of public types & APIs in two namespaces:

- System.Threading

- System.Threading.Tasks

It simplifies the process of adding parallelism and concurrency to applications. The vision behind TPL is for the developers to be able to maximize the performance of code while not having to worry about the low-level challenges of threading.

The TPL scales the degree of concurrency dynamically to most efficiently use all the processors that are available by:

- Handling the partitioning of work.

- Scheduling threads to run on the ThreadPool.

- Providing ways for canceling tasks.

It is generally the preferred way to write multithreaded and parallel code. Keep in mind the following:

- Not all code is suitable for parallelization.

- Threading of any type has an associated overhead. For example, spreading a function that runs in just a few milliseconds across multiple threads might not help the overall performance.

- In some cases, multithreading may be slower than just simple plain sequential code. For example, a function having a for loop with very few iterations.

TPL is based on the concept of a Task, which, as we already saw earlier, represents an asynchronous operation. The CLR will try to run our code in parallel. Internally, TPL makes use of Tasks in an optimized way, so they can pretty much run in parallel on different CPU cores if available.

TPL distinguishes between two kinds of parallelism: Task Parallelism and Data Parallelism.

Task Parallelism

Task parallelism refers to one or more independent tasks running concurrently. Tasks provide two primary benefits:

- More efficient use of system resources. Behind the scenes, tasks are queued to the ThreadPool, which has been enhanced with algorithms that determine and adjust to the number of active threads.

- More programmatic control (support for Task waiting, cancellation, continuations, robust exception handling, etc.) than what is possible when using a thread.
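As a small illustration of task parallelism, the sketch below starts three independent pieces of work and waits for all of them with Task.WhenAll. The three computations are arbitrary placeholders chosen for this example.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

// Three independent pieces of work, started together.
Task<int> sumTask = Task.Run(() => Enumerable.Range(1, 100).Sum());
Task<int> maxTask = Task.Run(() => new[] { 3, 9, 4 }.Max());
Task<int> lengthTask = Task.Run(() => "task parallelism".Length);

// WhenAll completes once every task has finished; the results array
// follows the order in which the tasks were passed in.
int[] results = await Task.WhenAll(sumTask, maxTask, lengthTask);

Console.WriteLine($"Sum: {results[0]}, Max: {results[1]}, Length: {results[2]}");
// Prints: Sum: 5050, Max: 9, Length: 16
```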

Data Parallelism

On the other hand, data parallelism refers to scenarios in which the same operation is performed in parallel on elements in a source collection or array. The source collection is partitioned so that multiple threads can operate on different segments concurrently.
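A minimal sketch of data parallelism with Parallel.For follows. The array contents are arbitrary sample data; the point is that the same operation is applied to every element while the runtime decides how to split the index range across threads.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

int[] source = Enumerable.Range(1, 1000).ToArray();
int[] squares = new int[source.Length];

// The index range is partitioned across worker threads. Each iteration
// writes only to its own slot, so no locking is needed.
Parallel.For(0, source.Length, i =>
{
    squares[i] = source[i] * source[i];
});

Console.WriteLine($"First: {squares[0]}, last: {squares[^1]}");
// Prints: First: 1, last: 1000000
```

Iterations that need to update shared state (a running total, for example) would require synchronization or the overloads of Parallel.For that support thread-local state.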

Async & Task-Based Asynchronous Pattern

Modern apps make extensive use of file and networking I/O. I/O APIs traditionally block by default, resulting in poor UX and hardware utilization. Task-based async APIs and the language-level asynchronous programming model invert this model, making async execution the default one. Async code has the following characteristics:

- Handles more server requests by yielding threads to handle more requests while waiting for I/O requests to return.

- Enables UIs to be more responsive by yielding threads to UI interaction while waiting for I/O requests and by transitioning long-running work to other CPU cores.

- Many of the newer .NET APIs are asynchronous.

Tasks, in this context, are constructs used to implement what is known as the Promise Model of Concurrency. They offer you a “promise” that work will be completed at a later point, letting you coordinate with the promise through the use of a clean API:

- Task represents a single operation that does not return a value.

- Task<T> represents a single operation that returns a value of type T.

Tasks are abstractions of work happening asynchronously, and not an abstraction over threading. By default, tasks execute on the current thread and delegate work to the Operating System, as appropriate. Optionally, tasks can be explicitly requested to run on a separate thread via the Task.Run API.
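The sketch below illustrates the first half of that statement under simple assumptions: an async method starts running synchronously on the caller's thread and only gives control back at its first await on an incomplete task. DoWorkAsync is a hypothetical method, and Task.Delay stands in for real I/O.

```csharp
using System;
using System.Threading.Tasks;

int callerThread = Environment.CurrentManagedThreadId;
int threadBeforeAwait = -1;

Task pending = DoWorkAsync();

// By the time DoWorkAsync returns its task, the code before its first
// await has already run, on the caller's own thread.
Console.WriteLine($"Caller thread: {callerThread}, before first await: {threadBeforeAwait}");
Console.WriteLine($"Completed yet? {pending.IsCompleted}");

await pending;
Console.WriteLine("Done.");

async Task DoWorkAsync()
{
    // Runs synchronously on the thread that called the method.
    threadBeforeAwait = Environment.CurrentManagedThreadId;

    // Task.Delay is timer-based; no thread is blocked while waiting.
    await Task.Delay(200);
}
```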

There are three different asynchronous programming patterns:

Task-based Asynchronous Pattern (TAP)

Introduced in .NET Framework 4, TAP is the recommended approach to asynchronous programming in .NET. The async and await keywords in C# and the Async and Await operators in Visual Basic add language support for TAP.

Event-based Asynchronous Pattern (EAP)

Event-based legacy model for providing asynchronous behavior. No longer recommended for new development.

Asynchronous Programming Model (APM)

Legacy model that uses the IAsyncResult interface to provide asynchronous behavior. It is also no longer recommended for new development.

With the introduction of tasks, TAP is the recommended way of doing asynchronous programming. To follow TAP, every asynchronous method needs to:

- Be declared with an “Async” suffix

- Have a return type of Task or Task<TResult>

- Be overloaded to accept a CancellationToken and / or an IProgress<T> to support cancellation or progress reporting

- Return quickly to the caller. The initial synchronous phase should be as small as possible.

- Make sure that it frees up the UI / main / thread pool thread as quickly as possible if it is an I/O bound task (out-of-process call).
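Put together, a method following these conventions might look like the sketch below. SumAsync, its parameters, and the use of Task.Delay as a stand-in for real asynchronous I/O are all assumptions made for illustration, not part of any library.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

using var cts = new CancellationTokenSource();
var progress = new Progress<int>(percent => Console.WriteLine($"Progress: {percent}%"));

int total = await SumAsync(new[] { 1, 2, 3, 4 }, progress, cts.Token);
Console.WriteLine($"Total: {total}");

// "Async" suffix, Task<TResult> return type, optional progress and cancellation.
static async Task<int> SumAsync(
    int[] values,
    IProgress<int>? progress = null,
    CancellationToken cancellationToken = default)
{
    int sum = 0;
    for (int i = 0; i < values.Length; i++)
    {
        // Cooperative cancellation: check before each unit of work.
        cancellationToken.ThrowIfCancellationRequested();

        // Stand-in for real asynchronous I/O; the thread is released while waiting.
        await Task.Delay(50, cancellationToken);

        sum += values[i];
        progress?.Report((i + 1) * 100 / values.Length);
    }
    return sum;
}
```

In a console application, Progress<T> invokes its callback on thread pool threads, so the progress lines may interleave with, or even follow, the final total.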


Summary

In conclusion, Tasks provide a higher-level abstraction over threads, offering compositional nature, easy chaining, and efficient utilization of system resources. Whether you’re working on CPU-intensive computations or asynchronous I/O operations, tasks provide a flexible and intuitive way to manage concurrent workloads. Moreover, the Task Parallel Library simplifies the process of adding parallelism to your applications, handling the partitioning of work, scheduling threads, and providing mechanisms for cancellation and exception handling. Finally, with the Task-Based Asynchronous Pattern (TAP), asynchronous programming has become more accessible and intuitive, enabling developers to write responsive and scalable applications.