As a developer, which areas should we focus on for performance optimizations? How can we measure the impact? Read this post to learn the framework.

Performance optimization is any software or hardware modification that makes the platform faster and more scalable, improving latency, throughput, or both.

You can spend a ton of time working on different areas to improve the performance, but which do you need to focus on the most?

This post will show you a framework I developed to classify performance changes. I use it to decide which areas my team and I should spend more time optimizing, and when we should stop. I have been using it for years and decided to write it down and share it. It’s not perfect, but it has worked very well for me.

This post uses latency improvements as examples, but the framework applies to throughput improvements as well.

Macro and Micro Optimizations

As developers, we often encounter areas where changes could improve performance. In some cases, the changes are straightforward, such as removing an unnecessary query, avoiding N+1 queries in the backend by batching, or eliminating unnecessary calls to the server in the frontend. In other cases, the changes are more complicated and require re-architecting the solution, such as implementing a different caching strategy across systems or implementing a completely new, much faster service.

This framework classifies performance optimizations into two types: Micro and Macro Optimizations.

Image 1. Micro and Macro Optimizations

Micro Optimizations

These are optimizations applied to very specific areas. Their impact is usually medium to low (e.g. speeding up a rarely used endpoint). In rare cases, the impact is very high, e.g. reducing the overhead of every authenticated request by improving the authentication flow.

Because they usually require little effort, most optimization changes fall into this category.

Some examples:

- Caching and batching specific APIs/Models.

- Removing unnecessary calls.

- Reducing memory allocations.

- Removing unnecessary libraries from the frontend bundle.

- Reducing the number of bytes the client has to download.

- Adding database indexes.
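As a sketch of the last item, here is how an index changes the way SQLite plans a lookup, using Python's standard-library `sqlite3` module. The `users` table, the `idx_users_email` index, and the data are hypothetical. `EXPLAIN QUERY PLAN` is SQLite's own command; the exact wording of its output varies between SQLite versions.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    # The last column of each EXPLAIN QUERY PLAN row is the human-readable detail.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany("INSERT INTO users (email, name) VALUES (?, ?)",
                 [(f"user{i}@example.com", f"user {i}") for i in range(1000)])

lookup = "SELECT * FROM users WHERE email = ?"
before = query_plan(conn, lookup, ("user42@example.com",))

# Hypothetical index on the column used in the WHERE clause.
conn.execute("CREATE INDEX idx_users_email ON users (email)")
after = query_plan(conn, lookup, ("user42@example.com",))

print(before)
print(after)
```

Before the index, the plan reports a full-table `SCAN` of `users`; after it, a `SEARCH` using `idx_users_email`. The lookup goes from reading every row to a B-tree search, at the cost of extra storage and slightly slower writes.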

A common mistake I see with optimizations in this category is not preventing the same performance regression from coming back. Let’s use an example: Ignacio improved endpoint X by removing its N+1 queries but didn’t prevent other developers from reintroducing them. A complete fix would require Ignacio to also write tests that fail if the endpoint starts issuing N+1 queries again. Don’t miss this critical step!
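One way to write such a test is to count the queries the endpoint issues and fail when that count grows. Below is a minimal sketch using Python's standard-library `sqlite3` module. `assert_max_queries`, `make_db`, `list_posts`, and the schema are hypothetical stand-ins for your own framework's tooling; many ORMs and test suites ship a similar helper. The test runs the endpoint against several data sizes, so an N+1 regression, whose query count grows with the number of rows, makes it fail.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def assert_max_queries(conn, limit):
    # Record every SQL statement executed inside the `with` block.
    statements = []
    conn.set_trace_callback(statements.append)
    try:
        yield statements
    finally:
        conn.set_trace_callback(None)
    # Only reached if the block finished without raising.
    assert len(statements) <= limit, (
        f"expected at most {limit} queries, got {len(statements)}:\n"
        + "\n".join(statements))


def make_db(num_posts):
    # Hypothetical schema with one author per post.
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
    """)
    conn.executemany("INSERT INTO authors (id, name) VALUES (?, ?)",
                     [(i, f"author-{i}") for i in range(num_posts)])
    conn.executemany("INSERT INTO posts (author_id, title) VALUES (?, ?)",
                     [(i, f"post-{i}") for i in range(num_posts)])
    conn.commit()
    return conn


def list_posts(conn):
    # Hypothetical endpoint under test: posts plus one batched author lookup.
    posts = conn.execute("SELECT author_id, title FROM posts ORDER BY id").fetchall()
    ids = sorted({author_id for author_id, _ in posts})
    placeholders = ",".join("?" * len(ids))
    names = dict(conn.execute(
        f"SELECT id, name FROM authors WHERE id IN ({placeholders})", ids))
    return [(title, names[author_id]) for author_id, title in posts]


def test_list_posts_has_no_n_plus_one():
    # The query count must stay constant as the number of posts grows.
    for num_posts in (1, 10, 100):
        conn = make_db(num_posts)
        with assert_max_queries(conn, 2):
            list_posts(conn)
```

If someone later rewrites `list_posts` to look up each author inside the loop, the 10- and 100-post cases exceed the limit and the test fails with the offending queries in its message.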

An interesting thing about this category is that the time for fixing the issue is usually short compared to the time spent investigating. The investigation can take many days, and the fix could be as simple as a line change, but the impact can be tremendous!

Macro Optimizations

This is the most fun category for me. The effort and scope are usually medium to high. It requires a lot of investigation, validation, and testing, and the developer needs to prove that the effort is worth it.

This type of optimization usually makes the platform harder to understand. An example would be introducing a new caching system: it makes the platform X times faster at the cost of making it Y times more complex.

Some examples:

- Introduce a new caching strategy across the platform.

- Adopting a new technology (database, library, etc.).

- Database migrations.

- Models redesign.

- Re-architecture of the platform.

A real-world example of a Macro Optimization: at Shopify, we removed the page param from all the APIs and replaced it with cursors. This allows all pagination queries to use cursor-based pagination instead of offset-based pagination, improving performance drastically. The effort and impact were very high, and we had to convince several people that it was worth it. If you want to learn more about this change, see this post.
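The difference between the two approaches can be sketched with Python's standard-library `sqlite3` module. This is not Shopify's implementation: the `products` table and both functions are hypothetical, and where a real API would return an opaque cursor string, here the cursor is simply the last `id` the client received. The offset query makes the database walk past every row before the requested page, so deep pages get slower; the cursor query seeks straight past the last seen `id` through the primary-key index, so its cost does not depend on how deep the page is.

```python
import sqlite3


def make_db(n):
    # Hypothetical table with ids 1..n.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, title TEXT)")
    conn.executemany("INSERT INTO products (id, title) VALUES (?, ?)",
                     [(i, f"product-{i}") for i in range(1, n + 1)])
    conn.commit()
    return conn


def page_by_offset(conn, page, per_page):
    # Offset-based: skips (page - 1) * per_page rows, which the database still walks.
    return conn.execute(
        "SELECT id, title FROM products ORDER BY id LIMIT ? OFFSET ?",
        (per_page, (page - 1) * per_page)).fetchall()


def page_by_cursor(conn, after_id, per_page):
    # Cursor-based (keyset): seek directly to rows after the last id the client saw.
    rows = conn.execute(
        "SELECT id, title FROM products WHERE id > ? ORDER BY id LIMIT ?",
        (after_id, per_page)).fetchall()
    next_cursor = rows[-1][0] if rows else None
    return rows, next_cursor


conn = make_db(95)
cursor_pages, cursor = [], 0
while cursor is not None:
    rows, cursor = page_by_cursor(conn, cursor, 10)
    if rows:
        cursor_pages.append(rows)
offset_pages = [page_by_offset(conn, page, 10) for page in range(1, 11)]
print(cursor_pages == offset_pages)
```

Starting from `after_id=0` (which assumes positive ids) and following the cursor until an empty page comes back yields the same ten pages as `page_by_offset`. The client only ever sends back the last cursor, never a page number.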

Best time spent by developers

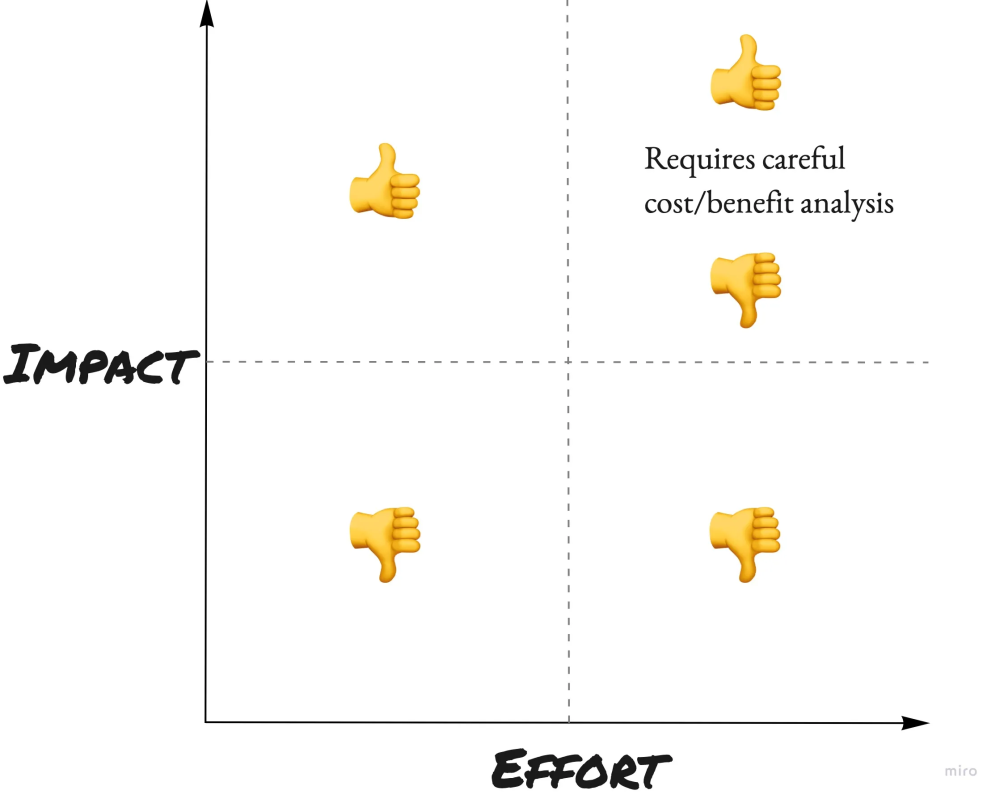

When deciding which areas we should focus on, the three main things we should look at are:

- Impact: There are different ways to measure the impact depending on the platform (e.g. #users impacted, #requests / #total requests).

- Effort: How much time and resources it takes.

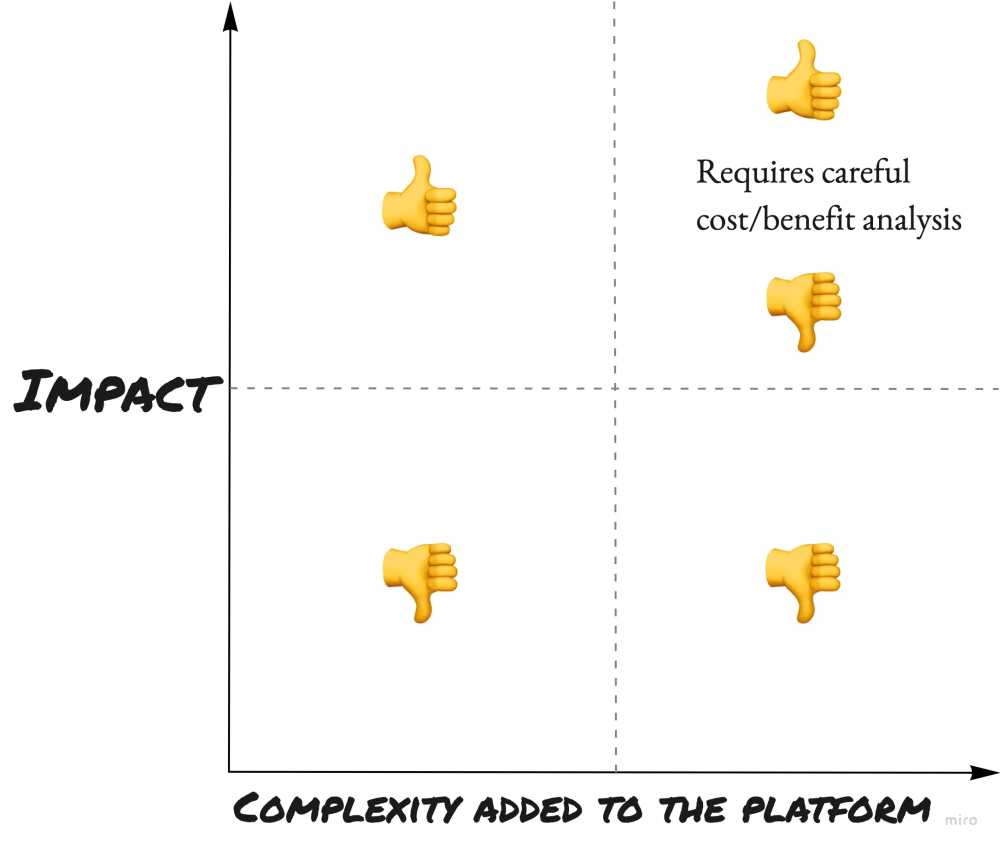

- Complexity added to the platform: How much more complex is the platform after applying the optimization? The complexity is usually high for Macro optimizations.

Image 2. Micro and Macro Optimization classification.

Any Macro Optimization with low impact must be avoided! Micro Optimizations with high impact are a must-have.

Only some of the high-impact Macro Optimizations should be done, and developers must justify that they are worth the effort.
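The rules above can be sketched as a small decision function in Python. Everything here is a hypothetical illustration of the framework rather than a formal model: the names are made up, impact is measured as the share of requests affected (one of the options listed earlier), and the 1% and 10% thresholds are placeholders you would tune for your platform. Combinations the rules don't cover fall back to weighing effort against impact.

```python
from enum import Enum


class Kind(Enum):
    MICRO = "micro"
    MACRO = "macro"


class Impact(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


def impact_from_traffic(affected_requests: int, total_requests: int) -> Impact:
    # Impact as #affected requests / #total requests; thresholds are placeholders.
    share = affected_requests / total_requests
    if share >= 0.10:
        return Impact.HIGH
    if share >= 0.01:
        return Impact.MEDIUM
    return Impact.LOW


def recommend(kind: Kind, impact: Impact) -> str:
    if kind is Kind.MICRO and impact is Impact.HIGH:
        return "must-have"
    if kind is Kind.MACRO and impact is Impact.LOW:
        return "avoid"
    if kind is Kind.MACRO and impact is Impact.HIGH:
        return "only with a justification that it is worth the effort"
    # Cases the rules don't spell out.
    return "weigh effort against impact"
```

For example, `recommend(Kind.MICRO, impact_from_traffic(400, 1_000))` returns `"must-have"`, since 40% of the traffic is affected.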

Any change that adds high complexity to the platform must be avoided unless the impact is very high! These optimizations are usually projects that take a significant amount of time and make the platform so complex that they are not worth the effort. Such a change might solve a specific problem at the cost of making the platform less scalable. See the following example:

Imagine Airbnb had a very complex system to calculate prices. The price of a “stay” considers many factors such as demand, supply, location, price history, user location, and many more. We could introduce a cache layer just for pricing, keyed on all the factors that affect the price. This could work well, but it makes the system dependent on this cache. Any new factor added to the pricing must invalidate the cache, and any missed factor loses money!
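One common way to reduce the risk of a missed factor is to derive the cache key from the complete pricing input instead of listing the factors by hand. The Python sketch below assumes a hypothetical `PricingInput` with just four factors and a made-up `compute_price` formula; it is not how Airbnb prices stays. `full_key` is built from every field of the input, so adding a field changes the key automatically. `hand_written_key` shows the failure mode: it omits `user_country`, so two different requests collide on one cache entry.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PricingInput:
    # Hypothetical pricing factors; a real system would have many more.
    listing_id: int
    nights: int
    demand: float
    user_country: str


def compute_price(inp: PricingInput) -> float:
    # Stand-in for an expensive pricing computation (made-up formula).
    surcharge = 1.1 if inp.user_country == "US" else 1.0
    return round(100.0 * inp.nights * (1 + inp.demand) * surcharge, 2)


def full_key(inp: PricingInput) -> str:
    # Built from every field, so a newly added factor changes the key automatically.
    return json.dumps(asdict(inp), sort_keys=True)


def hand_written_key(inp: PricingInput) -> str:
    # Buggy on purpose: forgets user_country, so different prices share one entry.
    return f"{inp.listing_id}:{inp.nights}:{inp.demand}"


class PriceCache:
    def __init__(self, key_fn):
        self._key_fn = key_fn
        self._store = {}

    def get_price(self, inp: PricingInput) -> float:
        key = self._key_fn(inp)
        if key not in self._store:
            self._store[key] = compute_price(inp)
        return self._store[key]


us = PricingInput(listing_id=1, nights=2, demand=0.5, user_country="US")
fr = PricingInput(listing_id=1, nights=2, demand=0.5, user_country="FR")

good, bad = PriceCache(full_key), PriceCache(hand_written_key)
print(good.get_price(us), good.get_price(fr))  # different prices
print(bad.get_price(us), bad.get_price(fr))    # FR user gets the cached US price
```

This lowers the risk of a missed factor but does not remove the added complexity: the cache still has to be invalidated whenever the pricing logic itself changes.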

Conclusion

This framework gives you an idea of which performance optimizations are worth the effort. It’s not perfect and in no way exhaustive, but it has worked pretty well for me.

When working on performance, consider effort, impact and complexity added to the platform.

Finally, performance should be a core concern for developers, not an afterthought. Ideally, we ship software that performs optimally. In practice, however, this is very hard, so post-project performance initiatives are still required.