Introduction

One common way to speed up our applications is to introduce a cache. Typically, the first option that comes to mind is an in-memory cache (MemoryCache) that keeps data in RAM to speed up retrieval.

This approach works well for monolithic applications. In microservice solutions, however, each service may scale independently. There, a local cache can break the stateless principle of the microservice architecture: every instance keeps its own copy of the cached data, so different instances may return different results for the same request.

For this reason, a better solution is a distributed cache shared by all instances. In .NET, the standard way to interact with a distributed cache is the IDistributedCache interface.

Distributed Cache

When working with IDistributedCache in a .NET application, you can choose which implementation to use.

The available implementations in .NET 8 include:

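- Distributed Memory Cache (stores data in the memory of the app instance, so it is not actually shared; useful for development and testing)

- Redis

- SQL Server

- NCache

- Azure Cosmos DB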

Redis is one of the most commonly used implementations, and it is the one used in the rest of this article.

For more detailed information, refer to the official documentation:

Distributed caching in ASP.NET Core

Important parameters

When working with a distributed cache, there are two important expiration parameters to know:

- Sliding Expiration: A span of time within which a cache entry must be accessed before the cache entry is evicted from the cache.

- Absolute Expiration: The point in time at which a cache entry is evicted. By default, an entry persisted in the cache does not expire.
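The sketch below models the eviction rule in plain C# to show how the two settings interact. It is a hypothetical illustration: IsExpired is not part of any .NET API. It assumes the semantics documented for DistributedCacheEntryOptions, where an entry is evicted as soon as either limit is reached. Under that rule, sliding expiration never extends an entry beyond its absolute expiration.

```csharp
using System;

// Hypothetical model of the eviction rule; it is not taken from any cache implementation.
// Returns true when an entry should be considered evicted at time 'now'.
static bool IsExpired(
    DateTimeOffset now,
    DateTimeOffset lastAccess,
    TimeSpan? slidingExpiration,
    DateTimeOffset? absoluteExpiration)
{
    // Absolute expiration: a fixed deadline, regardless of how often the entry is read.
    if (absoluteExpiration is DateTimeOffset deadline && now >= deadline)
        return true;

    // Sliding expiration: the entry must be read again within the idle window.
    if (slidingExpiration is TimeSpan idleLimit && now - lastAccess >= idleLimit)
        return true;

    // No option set, or no limit reached yet: the entry stays in the cache.
    return false;
}

var t0 = new DateTimeOffset(2024, 1, 1, 12, 0, 0, TimeSpan.Zero);
var sliding = TimeSpan.FromMinutes(5);
var absolute = t0.AddMinutes(30);

// Read 4 minutes after the last access: still inside the sliding window.
Console.WriteLine(IsExpired(t0.AddMinutes(4), t0, sliding, absolute));                 // False
// Not read for 6 minutes: the sliding window has elapsed.
Console.WriteLine(IsExpired(t0.AddMinutes(6), t0, sliding, absolute));                 // True
// Read at minute 29, but the absolute deadline at minute 30 still applies.
Console.WriteLine(IsExpired(t0.AddMinutes(31), t0.AddMinutes(29), sliding, absolute)); // True
```

With both options set, frequent reads keep an entry alive only until the absolute deadline. With neither set, the entry never expires.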

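The documentation linked below describes these settings for CacheItemPolicy (System.Runtime.Caching). With IDistributedCache, the equivalent settings are exposed by the DistributedCacheEntryOptions class through its SlidingExpiration, AbsoluteExpiration, and AbsoluteExpirationRelativeToNow properties. For more detailed information, refer to the official documentation: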

CacheItemPolicy.AbsoluteExpiration Property (System.Runtime.Caching)

CacheItemPolicy.SlidingExpiration Property (System.Runtime.Caching)

Cache service

One approach we can use in our application is to directly interact with the distributed cache where needed.

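For example, every service that needs caching requests an instance of IDistributedCache and works with it directly. This works, but it leads to repetitive code: IDistributedCache stores raw byte arrays, so each caller has to handle serialization, deserialization, and cache misses on its own.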

A preferable approach is to create a dedicated service for cache interactions and use it throughout the application. This centralizes cache management and can help avoid redundancy and improve maintainability.

Sample project

Here is a sample project I have prepared, containing an example of a cache service:

GitHub - GabrieleTronchin/CacheService: An abstraction over IDistributedCache

Project structure

This project has the following structure:

- docker: Contains a Docker Compose file with a configured Redis container.

- src: Contains the project source code.

- test: Contains the project tests.

Docker Compose

Here is the content of the docker-compose file that you will find on GitHub:

```yaml
version: '3'

services:
  redis-monitoring:
    image: redislabs/redisinsight:latest
    pull_policy: always
    ports:
      - '8001:8001'
    restart: unless-stopped
    networks:
      - default

  redis:
    image: redis:latest
    pull_policy: always
    ports:
      - "6379:6379"
    restart: unless-stopped
    networks:
      - default

networks:
  default:
    driver: bridge
```

As you can see, the compose file configures two services:

- redis: The Redis instance.

- redis-monitoring: A RedisInsight container that provides a UI to interact with Redis, useful for debugging.

This Docker Compose setup is intended only for development or testing. Be aware that Redis has changed its license policy.

You can find more information in this previous article:

API project overview

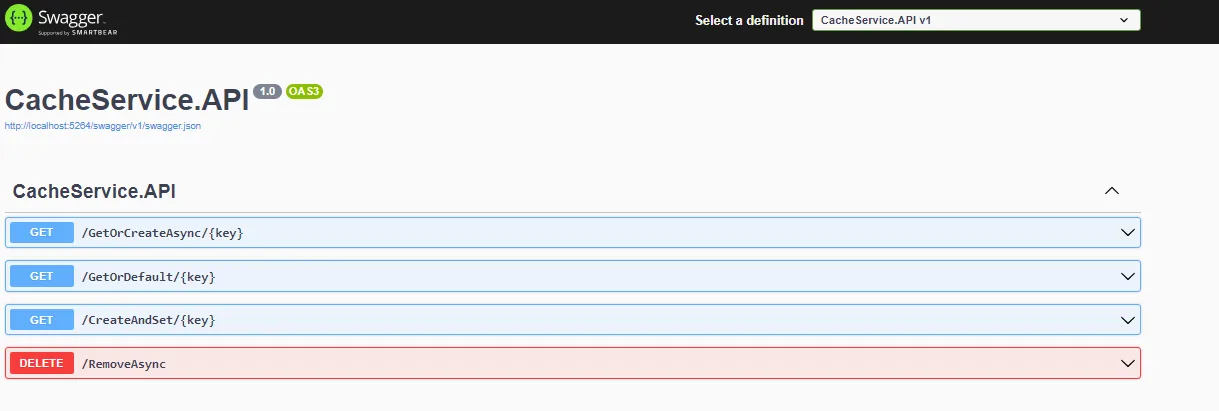

The API project exposes a few methods that interact with the IDistributedCache.

Here is a sample of what will appear when you start the application:

Here the code behind the API:

```csharp
app.MapGet("/GetOrCreateAsync/{key}", async (string key, ICacheService cache) =>
{
    // Returns the cached value, or creates, stores, and returns it on a miss.
    return await cache.GetOrCreateAsync(key, () => Task.FromResult($"{nameof(cache.GetOrCreateAsync)} - Hello World"));
})
.WithName("GetOrCreateAsync")
.WithOpenApi();

app.MapGet("/GetOrDefault/{key}", async (string key, ICacheService cache) =>
{
    // Returns the cached value, or the supplied fallback if the key is missing.
    return await cache.GetOrDefaultAsync(key, $"{nameof(cache.GetOrDefault)} - Hello World");
})
.WithName("GetOrDefault")
.WithOpenApi();

app.MapGet("/CreateAndSet/{key}", async (string key, ICacheService cache) =>
{
    // Writes the value under the given key.
    await cache.CreateAndSet(key, $"{nameof(cache.CreateAndSet)} - Hello World");
})
.WithName("CreateAndSet")
.WithOpenApi();

// key is bound from the query string, e.g. DELETE /RemoveAsync?key=foo
app.MapDelete("/RemoveAsync", async (string key, ICacheService cache) =>
{
    // Awaited so the response is sent only after the key has been removed.
    await cache.RemoveAsync(key);
})
.WithName("RemoveAsync")
.WithOpenApi();
```

Service registration

The service is configured to use an in-memory implementation of IDistributedCache if no Redis configuration has been provided.

In the API project, registering the cache only takes a call to the AddServiceCache extension method:

```csharp
builder.Services.AddServiceCache(builder.Configuration);
```

Here is the extension method itself:

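```csharp
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;

// Extension method: it must be declared inside a static class.
public static IServiceCollection AddServiceCache(this IServiceCollection services, IConfiguration configuration)
{
    // Bind the "Cache" section to CacheOptions and validate it with data annotations.
    services
        .AddOptions<CacheOptions>()
        .Bind(configuration.GetSection("Cache"))
        .ValidateDataAnnotations();

    if (!string.IsNullOrEmpty(configuration.GetSection("RedisCache:Configuration").Value))
    {
        // A Redis configuration is present: register Redis as the IDistributedCache
        // (Microsoft.Extensions.Caching.StackExchangeRedis package).
        services.AddStackExchangeRedisCache(options =>
        {
            configuration.Bind("RedisCache", options);
        });
    }
    else
    {
        // No Redis configuration: fall back to the in-memory IDistributedCache.
        services.AddDistributedMemoryCache();
    }

    services.AddTransient<ICacheService, CacheService>();

    return services;
}
```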

As you can see, it binds the “Cache” section of the application settings to a CacheOptions object and validates it through data annotations. This object holds a global sliding expiration value.

CacheService

Here is the interface of the CacheService:

```csharp
public interface ICacheService
{
    Task CreateAndSet<T>(string key, T thing, int expirationMinutes = 0) where T : class;
    Task<T> CreateAndSetAsync<T>(string key, Func<Task<T>> createAsync, int expirationMinutes = 0);
    Task<T> GetOrCreateAsync<T>(string key, Func<Task<T>> create, int expirationMinutes = 0);
    Task<T> GetOrDefault<T>(string key);
    Task<T> GetOrDefaultAsync<T>(string key, T defaultVal);
    Task RemoveAsync(string key);
}
```

It provides utility methods on top of IDistributedCache.

In particular, it offers different ways to retrieve data. One returns a default value when the key is not found; another creates and stores the value on a cache miss.
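The interface accepts an optional expirationMinutes argument, while CacheOptions holds a global sliding expiration. The article does not show how the two are combined. The rule below is therefore an assumption made purely for illustration: a positive per-call value overrides the global setting, and 0 means "use the global default". ResolveSlidingExpiration and the 60-minute global value are hypothetical.

```csharp
using System;

// Hypothetical rule, assumed for illustration (not taken from the sample repository):
// a positive per-call value wins; any other value (including the default 0)
// falls back to the global value.
static TimeSpan ResolveSlidingExpiration(int expirationMinutes, int globalSlidingMinutes) =>
    expirationMinutes > 0
        ? TimeSpan.FromMinutes(expirationMinutes)
        : TimeSpan.FromMinutes(globalSlidingMinutes);

// Assumed global value, as if bound from the "Cache" section of the app settings.
const int GlobalMinutes = 60;

Console.WriteLine(ResolveSlidingExpiration(0, GlobalMinutes)); // 01:00:00
Console.WriteLine(ResolveSlidingExpiration(5, GlobalMinutes)); // 00:05:00
```

Keeping the rule in a single function means every method of the service resolves expirations the same way.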

Let’s look at GetOrCreateAsync, for example:

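```csharp
using System.IO;
using Newtonsoft.Json;

// Method of CacheService; _cache is the injected IDistributedCache.
public async Task<T> GetOrCreateAsync<T>(string key, Func<Task<T>> create, int expirationMinutes = 0)
{
    // Try the cache first.
    var bytesResult = await _cache.GetAsync(key);

    if (bytesResult?.Length > 0)
    {
        // Cache hit: deserialize the stored JSON payload with Newtonsoft.Json.
        using StreamReader reader = new(new MemoryStream(bytesResult));
        using JsonTextReader jsonReader = new(reader);

        JsonSerializer ser = new();
        ser.ReferenceLoopHandling = ReferenceLoopHandling.Ignore;
        // TypeNameHandling.All honors $type metadata in the payload:
        // only safe when the cache content is trusted.
        ser.TypeNameHandling = TypeNameHandling.All;
        ser.StringEscapeHandling = StringEscapeHandling.EscapeNonAscii;

        var result = ser.Deserialize<T>(jsonReader);
        if (result != null)
        {
            return result;
        }
    }

    // Cache miss (or empty payload): create the value, store it, and return it.
    return await this.CreateAndSetAsync<T>(key, create, expirationMinutes);
}
```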
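Without Redis and Newtonsoft.Json, the flow of GetOrCreateAsync is the cache-aside pattern. It reads the key and deserializes on a hit; otherwise it creates the value, serializes it, stores it, and returns it. The sketch below isolates that flow, but it is not the sample's implementation. A Dictionary<string, byte[]> stands in for IDistributedCache, which also stores byte arrays, and System.Text.Json replaces Newtonsoft.Json.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Threading.Tasks;

// Stand-in for IDistributedCache: keys mapped to raw byte payloads.
var store = new Dictionary<string, byte[]>();
var factoryCalls = 0;

// Cache-aside: return the cached value if present, otherwise create, store and return it.
async Task<T> GetOrCreateAsync<T>(string key, Func<Task<T>> create)
{
    if (store.TryGetValue(key, out var bytes) && bytes.Length > 0)
    {
        // Hit: deserialize the UTF-8 JSON payload.
        var cached = JsonSerializer.Deserialize<T>(bytes);
        if (cached is not null)
            return cached;
    }

    // Miss: build the value, serialize it to bytes, and store it.
    var value = await create();
    store[key] = JsonSerializer.SerializeToUtf8Bytes(value);
    return value;
}

Func<Task<string>> factory = () =>
{
    factoryCalls++;
    return Task.FromResult("Hello World");
};

var first = await GetOrCreateAsync("greeting", factory);  // miss: factory runs
var second = await GetOrCreateAsync("greeting", factory); // hit: served from the store
Console.WriteLine($"{first} / {second} / factory calls: {factoryCalls}");
// Hello World / Hello World / factory calls: 1
```

The factory runs only once: the second call finds the serialized value and skips it.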

GetOrDefault, on the other hand, does not populate the cache on a miss. If the key is not found, it simply returns default(T), which is null for reference types.

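```csharp
using System.IO;
using Newtonsoft.Json;

// Method of CacheService; _cache is the injected IDistributedCache.
public async Task<T> GetOrDefault<T>(string key)
{
    var bytesResult = await _cache.GetAsync(key);

    if (bytesResult?.Length > 0)
    {
        // Cache hit: deserialize the stored JSON payload.
        using StreamReader reader = new(new MemoryStream(bytesResult));
        using JsonTextReader jsonReader = new(reader);

        JsonSerializer ser = new();
        ser.TypeNameHandling = TypeNameHandling.All;
        ser.StringEscapeHandling = StringEscapeHandling.EscapeNonAscii;

        var result = ser.Deserialize<T>(jsonReader);
        if (result != null)
        {
            return result;
        }
    }

    // Cache miss: return default(T) without writing anything to the cache.
    return default;
}
```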
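GetOrCreateAsync and GetOrDefault repeat the same deserialization block. One way to remove that duplication is to route every read through a single helper and build the "default" variants on top of it. The sketch below makes several assumptions:

- A Dictionary<string, byte[]> stands in for IDistributedCache.
- System.Text.Json replaces Newtonsoft.Json.
- The code is synchronous for brevity.

TryRead and GetOrFallback are hypothetical names, not part of the sample project.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Stand-in for IDistributedCache: keys mapped to raw byte payloads.
var store = new Dictionary<string, byte[]>
{
    ["name"] = JsonSerializer.SerializeToUtf8Bytes("Redis"),
};

// Hypothetical shared helper: the only place where payloads are deserialized.
bool TryRead<T>(string key, out T? value)
{
    value = default;
    if (!store.TryGetValue(key, out var bytes) || bytes.Length == 0)
        return false;

    value = JsonSerializer.Deserialize<T>(bytes);
    return value is not null;
}

// Like GetOrDefault: on a miss, return default(T) (null for reference types).
T? GetOrDefault<T>(string key) => TryRead<T>(key, out var value) ? value : default;

// Hypothetical variant: on a miss, return the caller's fallback; nothing is written.
T GetOrFallback<T>(string key, T fallback) => TryRead<T>(key, out var value) ? value! : fallback;

Console.WriteLine(GetOrDefault<string>("name") ?? "(null)");    // Redis
Console.WriteLine(GetOrDefault<string>("missing") ?? "(null)"); // (null)
Console.WriteLine(GetOrFallback("missing", "Hello World"));     // Hello World
```

Because TryRead is the only place that deserializes, serializer settings live in one spot instead of being repeated in every method.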

FusionCache

Creating a dedicated service to manage the distributed cache helps avoid code duplication and adds an abstraction layer. This approach suits simple scenarios, or cases where you want full control of the codebase and fewer external dependencies.

For more complex scenarios, a great library to consider is FusionCache. It is essentially a cache service on steroids, providing advanced resiliency features and an optional distributed second-level cache.

GitHub — ZiggyCreatures/FusionCache: FusionCache is an easy to use, fast and robust hybrid cache with advanced resiliency features.
